Robot Boxing in China: Show or Strategic Signal?

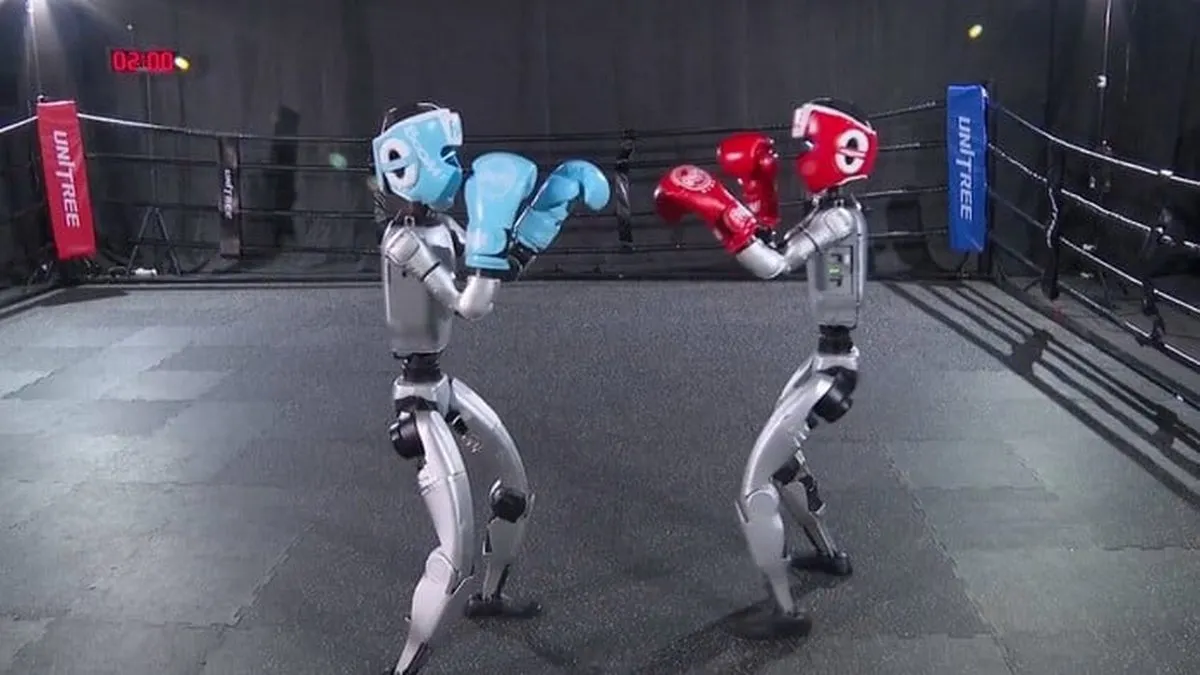

In May 2025, footage that looked like something out of a sci-fi movie went viral: humanoid robots stepping into a boxing ring in Hangzhou, China, trading blows, jumping, spinning, and knocking each other out. One fell and didn’t get up. The match was part of the first-ever Mecha Fighting Series, organized by China Media Group and presented as part of the World Robot Competition.

But beneath the metallic punches and crowd-pleasing visuals lies a reality that’s anything but entertaining. The event was not merely a showcase — it was a live demonstration of how far China has advanced in merging artificial intelligence with physical force. And it raises questions the global tech community, ethicists, and the general public can no longer afford to ignore.

Muscle, AI, and Control

The tournament featured G1 humanoid robots from Unitree Robotics: 1.32 meters tall, 35 kilograms of metal, sensors, and calibrated aggression. While the public saw a show, engineers saw something else entirely: a complex testing ground for robot balance, combat adaptation, physical feedback systems, and real-time decision-making.

The robots were remotely operated through a combination of joystick control and voice commands, but the matches also tested semi-autonomous response routines, evaluating how the robots handle unpredictable environments and aggressive physical contact. In other words, this was less entertainment than military-grade stress testing.

When Spectacle Becomes Soft Power

The event was heavily promoted by state media as a triumph of innovation and “Chinese smart manufacturing.” But it also functioned as a strategic tool of tech-driven soft power. While the West debates ethical boundaries and regulatory frameworks, China is presenting the future as already here — a future where humanoids can fight, fall, and rise again.

What seems like a harmless tournament may in fact serve three purposes:

- Testing for dual-use capabilities (civilian and military);

- Normalizing the public perception of combat-capable machines;

- Projecting technological dominance in an area still in early-stage development elsewhere.

This wasn’t just a contest. It was a message: we can build machines that move like humans and hit like fighters — and they work.

The Ethics of Combat-Ready Machines

However sophisticated the technology, the idea of humanoids trained to punch, and to knock each other out, is deeply unsettling. Even if the fights were controlled, the precedent is dangerous.

In a world where violence remains a persistent human failure, teaching robots to replicate that behavior, whether for testing, sport, or spectacle, walks an increasingly blurry ethical line.

Once machines learn to strike, the only remaining question is who gives the order — and who becomes the target.

This shift is not just about hardware. It’s about what kind of relationship society is building with automation, and whether aggression by machines will be normalized under the banner of progress.

A Glimpse of What’s Next

China has already announced the next stage: in December 2025, life-sized humanoid fighters will take the ring. That means stronger, faster, more autonomous machines — closer to the human form and, perhaps, human function.

The implications are vast. Today, it’s a robotic tournament. Tomorrow, it could be automated border enforcement, police patrols, or battlefield scenarios.

And while the world watches in awe or amusement, few are asking the more important question: what are we agreeing to, simply by not objecting?

Final Thoughts: The Cost of Admiring Efficiency

Technology in itself is not the problem. But how we choose to apply it is. When robots are trained to fight, and their abilities are met with applause rather than alarm, we risk crossing a line — from innovation into weaponization of the ordinary.

These robots weren’t designed just to fall theatrically. They were designed to keep fighting.

And that says more about our future than any press release ever will.